Finding Eigenvalues

How these little guys actually get computed

In AI, eigenvectors and eigenvalues are everywhere. You see them in places like principal component analysis1, RNN stability analysis2, and optimization analysis3. For a square matrix A, an eigenvector is a vector v, along with a corresponding scalar λ, the eigenvalue, such that:

The key idea is that applying the linear transformation A to the eigenvector v scales the vector’s magnitude, but otherwise leaves it untransformed. I recently dug into how eigenvectors and eigenvalues get computed in practice, and I wanted to share it, because it was fairly unintuitive, and actually kind of cool.

First, it’s worth sharing how I learned to compute eigenvalues back in school, since you might have seen this in a linear algebra class somewhere. Note that:

Assuming v is a non-zero eigenvector, this then implies that

For an n×n matrix A, this determinant expression translates into a nth order polynomial of λ. The roots of this equation are the eigenvalues of A. For a given eigenvalue λi of A, you can find the corresponding eigenvector by plugging this into the equation and solving for vi:

That’s all great, but that doesn’t tell you how to actually compute the roots of the polynomial. I did some digging and discovered that standard root-finding algorithms like Matlab’s4 find the roots by finding eigenvalues of the polynomial’s companion matrix5, so this seems circular:

To find the eigenvalues of a matrix, just find the roots of a corresponding polynomial.

To find the roots of a polynomial, just find the eigenvalues of a corresponding matrix.

I decided to go down the rabbit hole and get to the bottom of this. Here’s a quick summary of how modern libraries actually find eigenvalues.

Triangular Matrices

Note that if you have an upper-triangular matrix, like:

then the eigenvalues are just the diagonal entries of the matrix, since:

This is key, because the general algorithm for finding eigenvalues transforms the matrix into a triangular matrix with the same eigenvalues. Once you’ve done this, your done, since you can just read the diagonal entries as the eigenvalues.

Matrix Similarity

Two matrices A and B are similar6 if there exists an invertible matrix P, such that

A key property of similar matrices is that they have the same eigenvalues. So the basic idea of finding eigenvalues for A is to find a similar matrix to A that’s also upper triangular.

Schur decomposition

For any square matrix A, there exists an orthogonal matrix Q* and an upper triangular matrix H such that

Q* being orthogonal is just a fancy way of saying:

Since H is upper triangular, once you have the Schur decomposition, the eigenvalues are just the diagonal entries of H.

The tricky part is computing the Schur decomposition. To get that, modern eigenvalue solvers use a simpler matrix decomposition: the QR decomposition7. The QR decomposition is just a breakdown of A into an orthogonal matrix Q and an upper triangular matrix R:

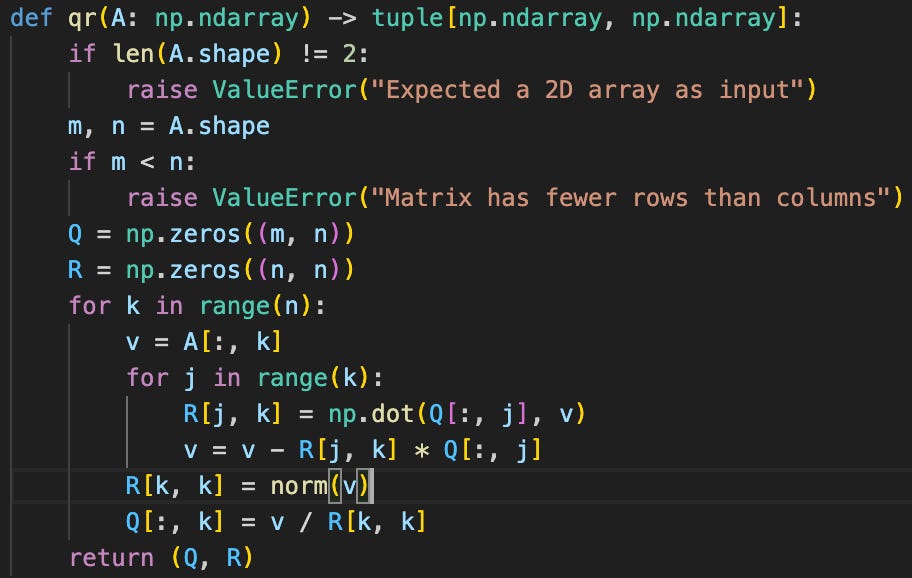

QR decomposition is straightforward. A simple implementation using the Gram-Schmidt8 process is shown here:

A more popular implementation uses Householder reflections9. Householder reflections are a bit less straightforward than Gram-Schmidt, but they are the de facto implementation, because they have better numerical stability.

There’s a neat property of QR decomposition that lets you use it to find the Schur decomposition. First, note that for an orthogonal Q:

QTAQ is a similar matrix to A. If you set:

you then get a sequence (A0, A1, …) of similar matrices to A. This sequence has the nifty property10 that

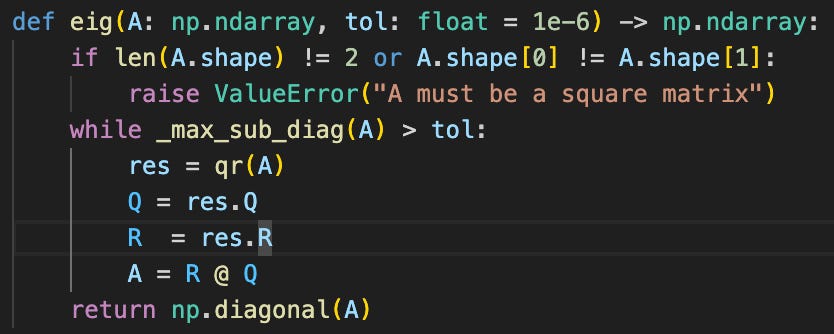

where Q* is the orthogonal matrix from the Schur decomposition, and H is the upper-triangular matrix Schur matrix. This gives you your eigenvalues, from it’s diagonals. This means you can use code like this to find the eigenvalues:

This is not too different than what production eigenvalue solvers do. There are a couple of key changes they make, for better performance, however:

Before the first step, they transform A into an upper Hessenberg11 form. This is a one-time transformation that costs O( n3 ) time, but it then reduces the time of each QR step in the loop from O( n3 ) to O( n2 ).

At each step, they perform a Wilkinson shift12. This is a transformation to the matrix that makes the overall loop go from having linear convergence to quadratic convergence, for the general case, and cubic convergence when A is symmetric.

Pearson, K. (1901). LIII. On lines and planes of closest fit to systems of points in space . The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science, 2(11), 559–572. https://doi.org/10.1080/14786440109462720

Panda, Priyadarshini, Efstathia Soufleri, and Kaushik Roy. "Evaluating the Stability of Recurrent Neural Models during Training with Eigenvalue Spectra Analysis." 2019 International Joint Conference on Neural Networks (IJCNN). IEEE, 2019.

Ghorbani, B., Krishnan, S. & Xiao, Y.. (2019). An Investigation into Neural Net Optimization via Hessian Eigenvalue Density. <i>Proceedings of the 36th International Conference on Machine Learning</i>, in <i>Proceedings of Machine Learning Research</i> 97:2232-2241 Available from https://proceedings.mlr.press/v97/ghorbani19b.html.

MathWorks. “Roots – Polynomial Roots – MATLAB.” MATLAB Documentation, MathWorks, www.mathworks.com/help/matlab/ref/roots.html. Accessed 26 May 2025.

“Companion Matrix.” Wikipedia, Wikimedia Foundation, https://en.wikipedia.org/wiki/Companion_matrix. Accessed 26 May 2025.

“Matrix Similarity.” Wikipedia, Wikimedia Foundation, https://en.wikipedia.org/wiki/Matrix_similarity. Accessed 26 May 2025.

“QR Decomposition.” Wikipedia, Wikimedia Foundation, 9 May 2025, https://en.wikipedia.org/wiki/QR_decomposition. Accessed 26 May 2025.

“Gram–Schmidt Process.” Wikipedia, Wikimedia Foundation, https://en.wikipedia.org/wiki/Gram%E2%80%93Schmidt_process. Accessed 26 May 2025.

“Householder Transformation.” Wikipedia, Wikimedia Foundation, https://en.wikipedia.org/wiki/Householder_transformation. Accessed 26 May 2025.

Falk, Richard S. “Convergence of the QR Algorithm.” Math 574 Lecture Notes, Rutgers U, 2004, pp. 32‑33. sites.math.rutgers.edu

“Hessenberg Matrix.” Wikipedia, Wikimedia Foundation, https://en.wikipedia.org/wiki/Hessenberg_matrix. Accessed 26 May 2025.

Yang, Ming-Hsuan. “Lecture 17.” EECS 275: Matrix Computation, University of California, Merced, https://faculty.ucmerced.edu/mhyang/course/eecs275/lectures/lecture17.pdf. Accessed 26 May 2025.